This design lets developers build, test, and deploy voice agents in minutes rather than months, while addressing real-world challenges such as natural conversation flow, low-latency responsiveness (under 500-800 ms voice-to-voice), and scalability for enterprise use cases.

Data Flow Summary

1. Audio Capture: Captures user audio via phone, web, or mobile interfaces.
2. Pre-Processing: Filters noise and background voices for clarity.
3. Transcription & Endpointing: Transcribes speech to text and detects pause points.
4. LLM Processing & Orchestration: Processes text with LLM, handling interruptions, backchannels, and emotions.
5. Speech Synthesis: Converts text to speech with natural fillers for smooth flow.
6. Output Delivery: Streams final audio back to the user with low latency.
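The six stages above can be read as a chain of functions, each taking the previous stage's output. The following is a minimal sketch of that idea, not SpiderX AI's implementation: every function name, the `raw_input` key, and the stub logic (text standing in for audio) are hypothetical and chosen only to make the ordering visible.

```python
from typing import Any, Callable, Dict, List

Payload = Dict[str, Any]
Stage = Callable[[Payload], Payload]


def capture_audio(payload: Payload) -> Payload:
    # Stand-in for phone/web/mobile capture: copy the raw input into "audio".
    return {**payload, "audio": payload["raw_input"]}


def pre_process(payload: Payload) -> Payload:
    # Stand-in for noise filtering: trim surrounding whitespace.
    return {**payload, "audio": payload["audio"].strip()}


def transcribe(payload: Payload) -> Payload:
    # Stand-in for ASR and endpointing: the fake audio is already text.
    return {**payload, "text": payload["audio"].lower()}


def llm_respond(payload: Payload) -> Payload:
    # Stand-in for the LLM step: build a canned reply from the transcript.
    return {**payload, "reply": "You said: " + payload["text"]}


def synthesize(payload: Payload) -> Payload:
    # Stand-in for TTS: encode the reply as bytes.
    return {**payload, "speech": payload["reply"].encode("utf-8")}


def deliver(payload: Payload) -> Payload:
    # Stand-in for streaming the audio back to the user.
    return {**payload, "delivered": True}


STAGES: List[Stage] = [
    capture_audio, pre_process, transcribe, llm_respond, synthesize, deliver,
]


def run_pipeline(stages: List[Stage], payload: Payload) -> Payload:
    """Run each stage in order and record the order in a trace list."""
    trace = []
    for stage in stages:
        payload = stage(payload)
        trace.append(stage.__name__)
    return {**payload, "trace": trace}


result = run_pipeline(STAGES, {"raw_input": "  Hello there  "})
```

In a real deployment each stage would stream partial results to the next rather than wait for a complete payload; the sketch keeps the stages sequential only to show the data flow.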

Orchestration & Advanced Conversation Models

Stop frustrating customers and boost conversions at every digital touchpoint of your customer journey with SpiderX AI's Neural Voice search and AI assistant.

Infrastructure & Developer Experience

Backend Technologies

Event-Driven Architecture:

Built on Node.js (using frameworks like NestJS), SpiderX AI efficiently handles asynchronous audio streams (audio packets arriving every ~20 ms) and manages continuous real-time interactions.
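To make the ~20 ms packet cadence concrete, here is a small sketch of an event-loop consumer reading fixed-size audio frames from a queue. It uses Python's `asyncio` as a stand-in for the Node.js event loop, and it assumes 16 kHz, 16-bit mono PCM; the sample rate, sample width, queue size, and all function names are assumptions for illustration, not details from the platform.

```python
import asyncio
from typing import Optional, Tuple

SAMPLE_RATE_HZ = 16_000  # assumption: 16 kHz audio
BYTES_PER_SAMPLE = 2     # assumption: 16-bit mono PCM
FRAME_MS = 20            # packet interval described in the text

# Bytes in one 20 ms frame under the assumptions above.
FRAME_BYTES = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * FRAME_MS // 1000


async def producer(queue: "asyncio.Queue[Optional[bytes]]", pcm: bytes) -> None:
    """Slice a PCM buffer into 20 ms frames and enqueue them."""
    for start in range(0, len(pcm), FRAME_BYTES):
        await queue.put(pcm[start:start + FRAME_BYTES])
        # Yield to the event loop; a live source would deliver a frame every ~20 ms.
        await asyncio.sleep(0)
    await queue.put(None)  # sentinel: end of stream


async def consumer(queue: "asyncio.Queue[Optional[bytes]]") -> Tuple[int, int]:
    """Drain frames until the sentinel; return (frame count, total bytes)."""
    frames = 0
    total_bytes = 0
    while True:
        frame = await queue.get()
        if frame is None:
            break
        frames += 1
        total_bytes += len(frame)
    return frames, total_bytes


async def stream(pcm: bytes) -> Tuple[int, int]:
    # A bounded queue applies backpressure if the consumer falls behind.
    queue: "asyncio.Queue[Optional[bytes]]" = asyncio.Queue(maxsize=50)
    producer_task = asyncio.create_task(producer(queue, pcm))
    result = await consumer(queue)
    await producer_task
    return result


one_second_of_silence = bytes(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)
frame_count, byte_count = asyncio.run(stream(one_second_of_silence))
```

Under these assumptions one second of audio is 32,000 bytes, which splits into 50 frames of 640 bytes each.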

Containerization & Orchestration:

SpiderX AI uses Kubernetes for container orchestration and services like Lummi for streamlined infrastructure management.

Data Persistence:

PostgreSQL is used for robust data storage, ensuring reliability and consistency.

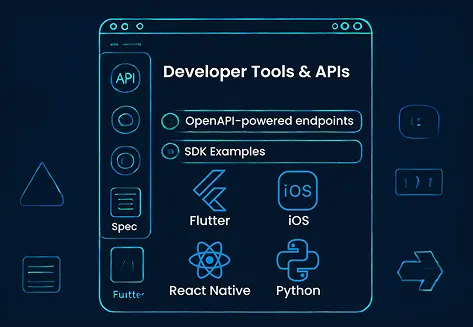

Developer Tools & APIs

OpenAPI Specifications:

SpiderX AI publishes an OpenAPI specification with comprehensive documentation, enabling seamless integration and quick prototyping.

SDKs & Dashboard:

The platform offers multiple SDKs (for web, iOS, Flutter, React Native, Python) along with an intuitive dashboard, making deployment and testing straightforward.

Modular Integration & Flexibility

Custom Provider Support

SpiderX AI’s architecture is modular, meaning that developers can mix and match different providers for each pipeline component. For example, you might choose one vendor for ASR, another for LLM processing, and yet another for TTS.
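One common way to get this kind of mix-and-match behavior is to define a small interface per pipeline component and let any provider that satisfies it be plugged in. The sketch below illustrates the pattern under that assumption; the interface names, the toy provider classes, and `VoicePipeline` are all hypothetical and are not SpiderX AI's actual SDK.

```python
from dataclasses import dataclass
from typing import Protocol


class ASRProvider(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LLMProvider(Protocol):
    def complete(self, prompt: str) -> str: ...


class TTSProvider(Protocol):
    def synthesize(self, text: str) -> bytes: ...


# Toy providers standing in for three different vendors.
class EchoASR:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class UppercaseLLM:
    def complete(self, prompt: str) -> str:
        return prompt.upper()


class ReverseLLM:
    def complete(self, prompt: str) -> str:
        return prompt[::-1]


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


@dataclass
class VoicePipeline:
    """Holds one provider per component; any of them can be swapped independently."""
    asr: ASRProvider
    llm: LLMProvider
    tts: TTSProvider

    def respond(self, audio: bytes) -> bytes:
        text = self.asr.transcribe(audio)
        reply = self.llm.complete(text)
        return self.tts.synthesize(reply)


pipeline_a = VoicePipeline(asr=EchoASR(), llm=UppercaseLLM(), tts=BytesTTS())
# Swap only the LLM provider; ASR and TTS stay the same.
pipeline_b = VoicePipeline(asr=EchoASR(), llm=ReverseLLM(), tts=BytesTTS())
```

Because the pipeline depends only on the three interfaces, replacing one vendor does not require touching the other two components.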

Low-Latency & Scalability

The system is engineered for international, real-time voice interactions with response times under 800 ms. Its scalable design leverages orchestration strategies and modern cloud infrastructure to ensure reliability and fault tolerance.
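A sub-800 ms target is easiest to reason about as a budget split across the pipeline stages. The following sketch shows the arithmetic only: the per-stage numbers are illustrative placeholders, not measurements of SpiderX AI, and the stage names and helper functions are hypothetical.

```python
from typing import Dict

BUDGET_MS = 800  # target stated in the text

# Illustrative placeholder latencies in milliseconds (NOT measured values).
EXAMPLE_STAGE_LATENCIES_MS: Dict[str, int] = {
    "pre_processing": 20,
    "transcription_endpointing": 200,
    "llm": 300,
    "speech_synthesis": 150,
    "network": 80,
}


def total_latency_ms(stage_latencies: Dict[str, int]) -> int:
    """Sum the per-stage latencies for a strictly sequential pipeline."""
    return sum(stage_latencies.values())


def within_budget(stage_latencies: Dict[str, int], budget_ms: int = BUDGET_MS) -> bool:
    """True when the end-to-end latency is under the budget."""
    return total_latency_ms(stage_latencies) < budget_ms


def slowest_stage(stage_latencies: Dict[str, int]) -> str:
    """Name of the stage that consumes the largest share of the budget."""
    return max(stage_latencies, key=lambda name: stage_latencies[name])


total = total_latency_ms(EXAMPLE_STAGE_LATENCIES_MS)
ok = within_budget(EXAMPLE_STAGE_LATENCIES_MS)
bottleneck = slowest_stage(EXAMPLE_STAGE_LATENCIES_MS)
```

With these placeholder values the total is 750 ms, which leaves 50 ms of headroom. Summing assumes the stages run strictly one after another; streaming partial results between stages lets them overlap and lowers the effective total.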